Scalable and Performant ASP.NET Core Web APIs: Importing Data

This is our last post in our series of posts on creating performant and scalable web APIs using ASP.NET Core. In this post, we’ll look at high volume data imports …

Often when a customer adopts our product, they will need to get some existing data into it. Ideally a frontend import facility would just leverage APIs that the rest of the product uses in order to import the data. The problem is that those APIs will likely have been built to POST single records and trying to use those APIs to insert millions of records simply won’t cut it.

Benchmark

Let’s pretend we need to get just 1000 contacts into our system through our API. Our API contoller is below - we are again using Dapper as our data access library. We’ll be posting and inserting the contacts one at a time to start off with.

[Route("api/contacts")]

public class ContactController : Controller

{

private readonly string _connectionString;

public ContactController(IConfiguration configuration)

{

_connectionString = configuration.GetConnectionString("DefaultConnection");

}

[HttpPost]

public async Task<IActionResult> PostSingle([FromBody] ContactInsert contact)

{

using (SqlConnection connection = new SqlConnection(_connectionString))

{

connection.Open();

string sql = @"INSERT INTO Contact(ContactId, Title, FirstName, Surname)

VALUES(@ContactId, @Title, @FirstName, @Surname)";

await connection.ExecuteAsync(sql, contact);

}

return Ok(new { ContactId = contact.ContactId });

}

}

public class ContactInsert

{

public Guid ContactId { get; set; } = Guid.NewGuid();

public string Title { get; set; }

public string FirstName { get; set; }

public string Surname { get; set; }

}We’re going to import the data through our API via a console app. We are generating 1000 contacts and pushing them through our API one at a time.

class Program

{

static void Main(string[] args)

{

Console.WriteLine("Enter number of records to import:");

int recordCount = Convert.ToInt32(Console.ReadLine());

Console.WriteLine("Importing - please wait ...");

Import import = new Import();

long timeTaken = import.SingleRequests(recordCount).GetAwaiter().GetResult();

Console.WriteLine($"Took {timeTaken}ms");

Console.WriteLine("");

Console.WriteLine("Import finished!");

Console.ReadLine();

}

}

class Import

{

public async Task<long> SingleRequests(int recordCount)

{

List<Contact> contacts = GetContacts(recordCount);

Stopwatch stopwatch = Stopwatch.StartNew();

HttpClient client = new HttpClient();

client.BaseAddress = new Uri("http://localhost:7454/");

client.DefaultRequestHeaders.Accept.Clear();

client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

foreach(Contact contact in contacts)

{

HttpResponseMessage response = await client.PostAsJsonAsync("api/contacts", contact);

response.EnsureSuccessStatusCode();

}

stopwatch.Stop();

return stopwatch.ElapsedMilliseconds;

}

private List<Contact> GetContacts(int recordCount)

{

List<Contact> contacts = new List<Contact>();

for (int i = 1; i <= recordCount; i++)

{

contacts.Add(new Contact()

{

Title = "Mrs",

FirstName = $"FirstName{i}",

Surname = $"Surname{i}"

});

}

return contacts;

}

}

class Contact

{

public string Title { get; set; }

public string FirstName { get; set; }

public string Surname { get; set; }

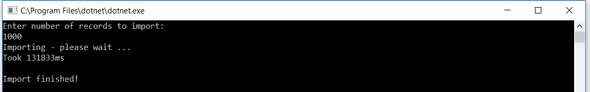

}If we run the console app we get:

So it took 131 seconds to import just 1000 contacts. Let’s see if we can improve that …

Posting a collection

An improvement would be to be able to POST all 1000 contacts to our API in a single request. The following API action method allows us to do this:

[HttpPost("collection")]

public async Task<IActionResult> PostCollection([FromBody] IEnumerable<ContactInsert> contacts)

{

List<Guid> contactIds = new List<Guid>();

using (SqlConnection connection = new SqlConnection(_connectionString))

{

connection.Open();

string sql = @"INSERT INTO Contact(ContactId, Title, FirstName, Surname)

VALUES(@ContactId, @Title, @FirstName, @Surname)";

foreach(ContactInsert contact in contacts)

{

await connection.ExecuteAsync(sql, contact);

contactIds.Add(contact.ContactId);

}

}

return Ok(contactIds);

}Here are the changes that allow our console app to use this new method of importing:

class Program

{

static void Main(string[] args)

{

Console.WriteLine("Enter number of records to import:");

int recordCount = Convert.ToInt32(Console.ReadLine());

Console.WriteLine("Importing - please wait ...");

Import import = new Import();

//long timeTaken = import.SingleRequests(recordCount).GetAwaiter().GetResult();

long timeTaken = import.Collection(recordCount).GetAwaiter().GetResult();

Console.WriteLine($"Took {timeTaken}ms");

Console.WriteLine("");

Console.WriteLine("Import finished!");

Console.ReadLine();

}

}

class Import

{

...

public async Task<long> Collection(int recordCount) { List<Contact> contacts = GetContacts(recordCount); Stopwatch stopwatch = Stopwatch.StartNew(); HttpClient client = new HttpClient(); client.BaseAddress = new Uri("http://localhost:7454/"); client.DefaultRequestHeaders.Accept.Clear(); client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json")); HttpResponseMessage response = await client.PostAsJsonAsync("api/contacts/collection", contacts); response.EnsureSuccessStatusCode(); stopwatch.Stop(); return stopwatch.ElapsedMilliseconds; }

...

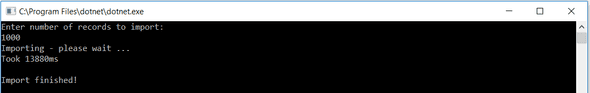

}This time we are able to load 1000 contacts in 13 seconds.

A pretty good improvement but can we do any better?

Inserting in bulk

Although our API takes a collection of contacts in the request, it still inserts them one by one into the database. If we insert them into the database using SqlBulkCopy we should be able to make the process even faster.

Here’s a new action method that does this:

[HttpPost("bulk")]

public IActionResult PostBulk([FromBody] IEnumerable<ContactInsert> contacts)

{

List<Guid> contactIds = new List<Guid>();

using (SqlConnection connection = new SqlConnection(_connectionString))

{

connection.Open();

using (SqlBulkCopy bulkCopy = new SqlBulkCopy(connection))

{

bulkCopy.DestinationTableName = "dbo.Contact";

bulkCopy.ColumnMappings.Add("ContactId", "ContactId");

bulkCopy.ColumnMappings.Add("Title", "Title");

bulkCopy.ColumnMappings.Add("FirstName", "FirstName");

bulkCopy.ColumnMappings.Add("Surname", "Surname");

DataTable dt = new DataTable();

dt.Columns.Add(new DataColumn("ContactId", typeof(Guid)));

dt.Columns.Add(new DataColumn("Title"));

dt.Columns.Add(new DataColumn("FirstName"));

dt.Columns.Add(new DataColumn("Surname"));

foreach (ContactInsert contact in contacts)

{

DataRow row = dt.NewRow();

row["ContactId"] = contact.ContactId;

row["Title"] = contact.Title;

row["FirstName"] = contact.FirstName;

row["Surname"] = contact.Surname;

dt.Rows.Add(row);

}

bulkCopy.WriteToServer(dt);

}

}

return Ok(contactIds);

}Here are the changes that allow our console app to use this new method of importing:

class Program

{

static void Main(string[] args)

{

Console.WriteLine("Enter number of records to import:");

int recordCount = Convert.ToInt32(Console.ReadLine());

Console.WriteLine("Importing - please wait ...");

Import import = new Import();

//long timeTaken = import.SingleRequests(recordCount).GetAwaiter().GetResult();

//long timeTaken = import.Collection(recordCount).GetAwaiter().GetResult();

long timeTaken = import.Bulk(recordCount).GetAwaiter().GetResult();

Console.WriteLine($"Took {timeTaken}ms");

Console.WriteLine("");

Console.WriteLine("Import finished!");

Console.ReadLine();

}

}

class Import

{

...

public async Task<long> Bulk(int recordCount) { List<Contact> contacts = GetContacts(recordCount); Stopwatch stopwatch = Stopwatch.StartNew(); HttpClient client = new HttpClient(); client.BaseAddress = new Uri("http://localhost:7454/"); client.DefaultRequestHeaders.Accept.Clear(); client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json")); HttpResponseMessage response = await client.PostAsJsonAsync("api/contacts/bulk", contacts); response.EnsureSuccessStatusCode(); stopwatch.Stop(); return stopwatch.ElapsedMilliseconds; }

...

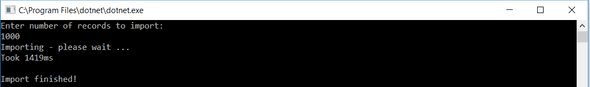

}If we try this method, it takes just over 1 second to do the import:

Conclusion

If our system needs to cope with large volume imports - leveraging single record APIs won’t cut it for large data volumes. It’s well worth creating specific APIs for importing large volumes of data that take collections of data in the request and then bulk insert into the database.

Comments

David McClelland April 6, 2018

I really enjoyed this series! Thinking about importing data as fast as possible brought to mind another question: what would be the best way to export data as fast as possible? For example, if I need to provide a large resultset, should I lean toward paging or is there a way to stream the result back to the client – kind of like downloading a large file? My particular use case is to return results from my API in response to a query (start date, end date), and that query runs against a SQL server database which hosts many millions of rows.

Carl April 6, 2018

Thanks David. I usually implement both filtering and paging on GET requests on resources with lots of data. It’s a bit of work to implement but if your resource contains millions of records then it’s worth it to minimise the memory your API will consume.

Toby April 6, 2018

Nice article. I would find it really handy to see an example of a bulk upsert with dapper to go with the bulk insert.

Carl April 6, 2018

Thanks Toby. Bulk upserting is a little more involved … I usually bulk insert the data into an intermediate table and then execute an additional SQL statement to the inserts / updates on the live data. If there’s enough interest, I’ll do a future post on this.

Danniel May 27, 2018

Best article I read so far about API import. Well done!

Sankar March 29, 2019

Very good article. I would like to see a Bulk Upsert example as well.

Chandrakant Patil April 23, 2019

Very nice explained.

Devesh Sharma August 8, 2019

Brilliant Article. Many things I was already using but lot I get to know by this Post. Very Informative for any Developer.

If you to learn about using React with ASP.NET Core you might find my book useful: